Portable Autotunable Skeleton Programming Framework for Multicore CPU and Multi-GPU Systems and Clusters

New (Feb. 2026): Release of SkePU 3.3, with SkePU-DNN and the new SkeVU trace visualizer.

Introduction

Skeleton programming is a high-level (parallel) programming approach where an application is written with the help of (algorithmic) skeletons. A skeleton is a predefined, generic programming construct, such as map, reduce, scan, farm, or pipeline, that implements a common pattern of computation and data dependence, may have efficient implementations for parallel, distributed, or heterogeneous computer systems, and can be customized with (sequential) user-defined code parameters. Skeletons provide a high degree of abstraction and portability with a quasi-sequential programming interface, as their implementations encapsulate all low-level and platform-specific details such as parallelization, synchronization, communication, memory management, accelerator usage, and other optimizations.

SkePU is an open-source skeleton programming framework for multicore CPUs and multi-GPU systems. It is a C++ template library with (in its current version) eight data-parallel skeletons and four generic data-container types for operands, and it supports execution on multi-GPU systems with both CUDA and OpenCL, as well as on clusters.

Main features

Supported skeletons

- Map: Data-parallel element-wise application of a function with arbitrary arity.

- Reduce: Reduction with 1D, 2D, 3D, and 4D variants.

- MapReduce: Efficient chaining of Map and Reduce.

- MapOverlap: Stencil operation in 1D, 2D, 3D, and 4D.

- MapPool: Partial block-wise reduction (pooling) in 1D, 2D, 3D, and 4D.

- Scan: Generalized prefix-sum computation.

- MapPairs: Cartesian product-style computation.

- MapPairsReduce: Efficient chaining of MapPairs and Reduce.

- Call (deprecated): Wrapping user-defined parallel C++ code; replaced by skepu::external.

Unless explicitly stated otherwise, these skeletons are fully polymorphic in the per-element operator (user function), the operand shape (data-container dimensionality), the operand access pattern, the number of input and output operands (arity), and the element data type.

Multiple back-ends and multi-GPU support

Each skeleton has several backends (implementations): sequential C, OpenMP, OpenCL, CUDA, and multi-GPU OpenCL and CUDA. There is also an older variant of SkePU 3 for cluster-parallel execution based on MPI and StarPU, which supports a subset of the skeletons.

Tunable context-aware implementation selection

Depending on the properties of a skeleton call's execution context (usually the operand size), some backends (skeleton implementations) for particular execution unit types will be more efficient than others. SkePU provides a tuning framework that automatically selects the implementation variant expected to be fastest for each skeleton call.
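As a rough, hypothetical illustration of size-based selection (not SkePU's tuner or its internal data structures), the following C++ sketch maps operand-size ranges, as an offline tuning run might have measured them, to the backend that was fastest in each range. The `Backend` enum, the `TunedSelector` class, and any threshold values are assumptions made for this example.

```cpp
#include <cstddef>
#include <map>

// Hypothetical backend identifiers (not SkePU's own enum).
enum class Backend { CPU, OpenMP, CUDA };

// Hypothetical selector: each entry maps the lower bound of an operand-size
// range to the backend that a tuning run found fastest from that bound
// up to the next registered bound.
class TunedSelector {
public:
  // Register that backend b is preferred for sizes >= min_size.
  void add_range(std::size_t min_size, Backend b) { table_[min_size] = b; }

  // Return the backend registered for the largest bound <= size.
  Backend select(std::size_t size) const {
    auto it = table_.upper_bound(size);               // first bound > size
    if (it == table_.begin()) return Backend::CPU;    // fallback: no range covers size
    --it;                                             // largest bound <= size
    return it->second;
  }

private:
  std::map<std::size_t, Backend> table_;  // kept sorted by lower bound
};
```

A sorted map keeps each lookup logarithmic in the number of ranges; a real selector would also have to account for which backends are actually available on the current machine.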

Smart data-containers (Vector, Matrix, Tensor)

SkePU smart data-containers are runtime data structures that wrap and manage the arrays passed as operands to skeleton calls. They transparently perform coherent software caching of operand elements in device memories by automatically keeping track of which copies of their element data are valid and where those copies reside. At runtime, smart data-containers can automatically optimize communication, perform memory management, and synchronize asynchronous skeleton calls driven by operand data flow.

Smart data-containers are available as generic data types for 1D vectors, 2D matrices, 3D tensors, and 4D tensors.
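The bookkeeping behind coherent software caching can be sketched in plain C++ with one host copy, one "device" copy, and a validity flag for each. This is a simplified, hypothetical model: the class name `CoherentBuffer` and its methods are invented here, and the device copy is just another `std::vector`. It only shows how tracking valid copies lets repeated reads avoid redundant transfers.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical, simplified model of a smart container's coherence tracking.
// The "device" copy is a second host vector; a real implementation would
// allocate device memory and issue host<->device copies instead.
template <typename T>
class CoherentBuffer {
public:
  explicit CoherentBuffer(std::size_t n) : host_(n), device_(n) {}

  // Host read: copy back from the device only if the host copy is stale.
  const std::vector<T>& host_read() {
    if (!host_valid_) { host_ = device_; host_valid_ = true; ++transfers_; }
    return host_;
  }
  // Host write: make the host copy current, then invalidate the device copy.
  std::vector<T>& host_write() {
    host_read();
    device_valid_ = false;
    return host_;
  }
  // Device read: upload only if the device copy is stale.
  const std::vector<T>& device_read() {
    if (!device_valid_) { device_ = host_; device_valid_ = true; ++transfers_; }
    return device_;
  }
  // Device write (e.g. a skeleton output operand): invalidate the host copy.
  std::vector<T>& device_write() {
    device_read();
    host_valid_ = false;
    return device_;
  }
  std::size_t transfers() const { return transfers_; }

private:
  std::vector<T> host_, device_;
  bool host_valid_ = true, device_valid_ = false;
  std::size_t transfers_ = 0;  // number of simulated copies performed
};
```

Reading the same data on the device twice costs a single upload, and only a write on one side invalidates the other copy; this is the kind of saving that benefits sequences of skeleton calls on the same containers.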

Access control for container elements inside user functions

SkePU smart data-containers are typically made available element-wise in user functions. More complex data access patterns may require access to multiple elements of a single smart container. SkePU supports this use case with proxy containers: Vec<T>, Mat<T>, Ten3<T>, and Ten4<T>, which are likewise generic in the element type T.

In addition to whole-container proxy objects, there are further generic proxy types for partial container access: MatRow<T> and MatCol<T> for matrices (e.g. for iterating through a matrix row in a matrix-vector multiplication), Region1D<T>...Region4D<T>, representing the neighborhood around a specific element for each of the four types of smart data-containers (e.g. the pixel neighborhood to which stencil weights are applied in an image filter), and Pool1D<T>...Pool4D<T> (block regions for pooling operators).

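```cpp
#include <skepu>

// User function: computes one element y[row.i] of y = A * x.
// Index1D gives the index of the output element; the Mat and Vec
// proxies give access to all elements of A and x.
template<typename T>
T mvmult_f(skepu::Index1D row, const skepu::Mat<T> m, const skepu::Vec<T> v)
{
  T res = 0;
  for (size_t j = 0; j < v.size; ++j)
    res += m(row.i, j) * v(j);
  return res;
}

// Wrapper function added for illustration.
void mvmult_example(size_t height, size_t width)
{
  skepu::Vector<float> y(height), x(width);
  skepu::Matrix<float> A(height, width);
  auto mvmult = skepu::Map(mvmult_f<float>);  // the user function template must be instantiated
  mvmult(y, A, x);
}
```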
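For comparison, the computation performed by the Map call can be written as an ordinary sequential loop in standard C++: each output index plays the role of `Index1D row`, and the whole-row and whole-vector reads correspond to the `Mat<T>` and `Vec<T>` proxies. The helper names and the row-major `std::vector` storage are assumptions for this sketch; it is a reference for checking results, not how SkePU implements Map.

```cpp
#include <cstddef>
#include <vector>

// One "user function" call: output element `row` reads a full row of A
// (row-major, `width` columns) and all of x.
template <typename T>
T mvmult_row(std::size_t row, const std::vector<T>& A, std::size_t width,
             const std::vector<T>& x) {
  T res = 0;
  for (std::size_t j = 0; j < width; ++j) res += A[row * width + j] * x[j];
  return res;
}

// Sequential reference: the Map over output indices, one independent call per row.
template <typename T>
std::vector<T> mvmult_reference(const std::vector<T>& A, std::size_t height,
                                std::size_t width, const std::vector<T>& x) {
  std::vector<T> y(height);
  for (std::size_t i = 0; i < height; ++i) y[i] = mvmult_row(i, A, width, x);
  return y;
}
```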

Downloads

Software License

SkePU is licensed under a modified four-clause BSD license. For more information, please see the license file included in the downloadable source code.

System Requirements

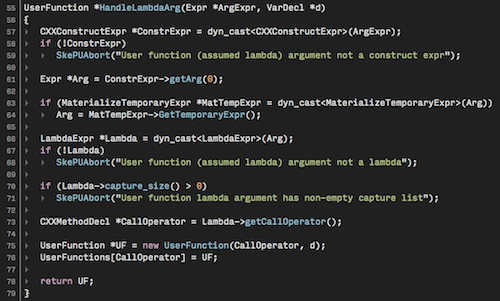

SkePU requires a target compiler compatible with the C++11 standard (or later). Additionally, a system able to both compile and run the LLVM system, including Clang, is required (this need not be the target system).

SkePU is tested with GCC 4.9+ on Linux and macOS, and with Apple LLVM (Clang) 8.0 on macOS.

The following optional features require special compiler support:

- The CUDA backend requires a CUDA compiler and runtime (version 7 or later) and CUDA-capable hardware.

- The OpenCL backend requires an OpenCL runtime and supported hardware.

- The OpenMP backend requires an OpenMP-enabled compiler (e.g., GCC).

Example

This example demonstrates SkePU with a program computing the Pearson product-moment correlation coefficient (PPMCC) of two vectors. It uses three skeleton instances (sum, sumSquare, and dotProduct) and illustrates multiple ways of defining user functions. SkePU's smart containers are well suited to calling multiple skeletons in sequence, as done here.

```cpp
#include <iostream>
#include <cmath>
#include <skepu>

// Unary user function
float square(float a)
{
  return a * a;
}

// Binary user function (same operation as the lambda in dotProduct below)
float mult(float a, float b)
{
  return a * b;
}

// User function template
template<typename T>
T plus(T a, T b)
{
  return a + b;
}

// Function computing PPMCC
float ppmcc(skepu::Vector<float> &x, skepu::Vector<float> &y)
{
  // Instance of Reduce skeleton
  auto sum = skepu::Reduce(plus<float>);

  // Instance of MapReduce skeleton
  auto sumSquare = skepu::MapReduce<1>(square, plus<float>);

  // Instance with lambda syntax
  auto dotProduct = skepu::MapReduce<2>(
    [] (float a, float b) { return a * b; },
    [] (float a, float b) { return a + b; }
  );

  size_t N = x.size();
  float sumX = sum(x);
  float sumY = sum(y);

  return (N * dotProduct(x, y) - sumX * sumY)
    / sqrt((N * sumSquare(x) - pow(sumX, 2)) * (N * sumSquare(y) - pow(sumY, 2)));
}

int main()
{
  const size_t size = 100;

  // Vector operands
  skepu::Vector<float> x(size), y(size);
  x.randomize(1, 3);
  y.randomize(2, 4);

  std::cout << "X: " << x << "\n";
  std::cout << "Y: " << y << "\n";

  float res = ppmcc(x, y);

  std::cout << "res: " << res << "\n";

  return 0;
}
```
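When validating the SkePU version, a plain sequential reference can help. The sketch below is standard C++ (no SkePU) and applies the same formula as `ppmcc()`, computing the three kinds of sums in a single pass. `ppmcc_reference` is a hypothetical name, and the `double` accumulators are a choice made here to reduce round-off, so small differences from the `float` SkePU result are to be expected.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Sequential PPMCC using the same formula as ppmcc():
//   r = (N*sum(xy) - sum(x)*sum(y)) /
//       sqrt((N*sum(x^2) - sum(x)^2) * (N*sum(y^2) - sum(y)^2))
double ppmcc_reference(const std::vector<double>& x, const std::vector<double>& y) {
  const double N = static_cast<double>(x.size());
  double sx = 0, sy = 0, sxx = 0, syy = 0, sxy = 0;
  for (std::size_t i = 0; i < x.size(); ++i) {
    sx += x[i];           // plain sums (the "sum" Reduce)
    sy += y[i];
    sxx += x[i] * x[i];   // sums of squares (the "sumSquare" MapReduce)
    syy += y[i] * y[i];
    sxy += x[i] * y[i];   // dot product (the "dotProduct" MapReduce)
  }
  return (N * sxy - sx * sy) /
         std::sqrt((N * sxx - sx * sx) * (N * syy - sy * sy));
}
```

Perfectly linearly related inputs give r = 1 (or -1 for a negative slope), which makes a convenient sanity check.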

Skeletons

SkePU contains the skeletons Map, Reduce, MapReduce, Scan, MapOverlap, MapPool, MapPairs, and MapPairsReduce. They are presented separately below, most with a short program snippet exemplifying their usage. In practice, calling skeletons repeatedly and combining multiple types of skeletons is encouraged, to take full advantage of SkePU features such as the smart containers.

Map

The Map skeleton represents a flat, data-parallel computation without dependencies. Each sub-computation is an application of the user function supplied to the Map. It operates on SkePU containers by applying the user function (sum in the example below) element by element. Any number of equally sized containers can be supplied as arguments; Map passes one element from each to the user function. For more general computations, extra arguments can also be supplied as needed, and the user function can access them freely. These can be containers or scalar values.

Map can also be used without element-wise arguments, with the number of sub-computations controlled only by the size of the output container. Programs can combine this with the fact that the user function can be made aware of the index of the currently processed element to create interesting use cases; see, for example, the Mandelbrot program in the distribution.

```cpp
#include <skepu>

float sum(float a, float b)
{
  return a + b;
}

skepu::Vector<float> vector_sum(skepu::Vector<float> &v1, skepu::Vector<float> &v2)
{
  auto vsum = skepu::Map<2>(sum);
  skepu::Vector<float> result(v1.size());
  return vsum(result, v1, v2);
}
```
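The element-wise semantics described above can be mimicked sequentially in standard C++17, using a fold expression to check that the operands are equally sized. `map_seq` and `map_indexed_seq` are hypothetical names; the sketch covers any number of equally sized inputs as well as the zero-arity, index-aware case, but omits SkePU features such as extra non-element-wise arguments and the parallel backends.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// out[i] = f(first[i], rest[i]...) for any number of equally sized inputs.
template <typename R, typename F, typename T, typename... Ts>
std::vector<R> map_seq(F f, const std::vector<T>& first,
                       const std::vector<Ts>&... rest) {
  // All element-wise operands must have the same size.
  const bool same_size = ((rest.size() == first.size()) && ... && true);
  assert(same_size);
  (void)same_size;  // silence the unused-variable warning when NDEBUG is set
  std::vector<R> out(first.size());
  for (std::size_t i = 0; i < out.size(); ++i) out[i] = f(first[i], rest[i]...);
  return out;
}

// Zero element-wise arity: only the output size and the element index
// drive the computation (the pattern used by programs like Mandelbrot).
template <typename R, typename F>
std::vector<R> map_indexed_seq(std::size_t n, F f) {
  std::vector<R> out(n);
  for (std::size_t i = 0; i < n; ++i) out[i] = f(i);
  return out;
}
```

Every iteration is independent of the others, which is exactly what lets a skeleton implementation distribute the index range over threads or GPU work-items.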

Reduce

Reduce performs an associative reduction. Two modes are available: 1D reduction and 2D reduction. A 1D reduction instance accepts a vector or a matrix and produces a scalar or a vector, respectively; a 2D reduction instance works only on matrices. For matrix reductions, the primary direction of the reduction (row-first or column-first) can be controlled.

```cpp
#include <skepu>

float min_f(float a, float b)
{
  return (a < b) ? a : b;
}

float min_element(skepu::Vector<float> &v)
{
  auto min_calc = skepu::Reduce(min_f);
  return min_calc(v);
}
```
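The reduction shapes described above can be sketched sequentially on a row-major matrix stored in a `std::vector`. The function names are hypothetical, and the operator is applied strictly left to right here; associativity is what permits a parallel backend to regroup the same operations without changing the result.

```cpp
#include <cstddef>
#include <vector>

// 1D reduction of a vector to a scalar. Starting from the first element
// means no identity value is needed (the vector must be non-empty).
template <typename T, typename F>
T reduce_seq(const std::vector<T>& v, F f) {
  T acc = v.at(0);
  for (std::size_t i = 1; i < v.size(); ++i) acc = f(acc, v[i]);
  return acc;
}

// 1D reduction of a rows x cols matrix: one result per row.
template <typename T, typename F>
std::vector<T> reduce_rows_seq(const std::vector<T>& m, std::size_t rows,
                               std::size_t cols, F f) {
  std::vector<T> out(rows);
  for (std::size_t r = 0; r < rows; ++r) {
    auto first = m.begin() + static_cast<std::ptrdiff_t>(r * cols);
    std::vector<T> row(first, first + static_cast<std::ptrdiff_t>(cols));
    out[r] = reduce_seq(row, f);
  }
  return out;
}
```

Applying `reduce_seq` to the vector returned by `reduce_rows_seq` yields one scalar for the whole matrix, i.e. a row-first 2D reduction.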

MapReduce

MapReduce chains Map and Reduce and offers the features of both, with some limitations; for example, only 1D reductions are supported. An instance is created from two user functions, one for mapping and one for reducing. The reduce function should be associative.

As with Map, zero element-wise arity and element-index awareness in the user function enable interesting use cases. The Taylor program in the distribution uses this to perform Taylor series approximations with zero data movement overhead. The example below shows a more typical dot product computation.

```cpp
#include <skepu>

float add(float a, float b)
{
  return a + b;
}

float mult(float a, float b)
{
  return a * b;
}

float dot_product(skepu::Vector<float> &v1, skepu::Vector<float> &v2)
{
  auto dotprod = skepu::MapReduce<2>(mult, add);
  return dotprod(v1, v2);
}
```
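The fused map-then-reduce pattern, including the zero-arity, index-driven form used for series approximations, can be sketched sequentially in standard C++. All names are hypothetical, and `taylor_exp` only illustrates the idea of generating each series term from its index; it is not the Taylor program from the SkePU distribution.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Binary MapReduce: reduce(map(a[i], b[i])) without storing the mapped values.
template <typename T, typename F, typename G>
T map_reduce_seq(const std::vector<T>& a, const std::vector<T>& b, F map_f,
                 G red_f, T init) {
  T acc = init;
  for (std::size_t i = 0; i < a.size(); ++i) acc = red_f(acc, map_f(a[i], b[i]));
  return acc;
}

// Zero element-wise arity: each mapped value is generated from its index only.
template <typename T, typename F, typename G>
T map_reduce_indexed_seq(std::size_t n, F map_f, G red_f, T init) {
  T acc = init;
  for (std::size_t k = 0; k < n; ++k) acc = red_f(acc, map_f(k));
  return acc;
}

// e^x approximated by the first `terms` terms of sum_k x^k / k!,
// each term computed independently from k.
double taylor_exp(double x, std::size_t terms) {
  return map_reduce_indexed_seq(
      terms,
      [x](std::size_t k) {
        return std::pow(x, static_cast<double>(k)) /
               std::tgamma(static_cast<double>(k) + 1.0);  // tgamma(k+1) = k!
      },
      [](double a, double b) { return a + b; }, 0.0);
}
```

Because each term depends only on k, no input container is read at all; only the reduced scalar leaves the computation.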

Scan

Scan performs a generalized prefix sum operation, either inclusive or exclusive.

```cpp
#include <skepu>

float max_f(float a, float b)
{
  return (a > b) ? a : b;
}

skepu::Vector<float> partial_max(skepu::Vector<float> &v)
{
  auto premax = skepu::Scan(max_f);
  skepu::Vector<float> result(v.size());
  return premax(result, v);
}
```
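Inclusive and exclusive scan differ only in whether element i contributes to output i. A sequential sketch in standard C++, with hypothetical function names:

```cpp
#include <cstddef>
#include <vector>

// Inclusive scan: out[i] = in[0] op in[1] op ... op in[i].
template <typename T, typename F>
std::vector<T> inclusive_scan_seq(const std::vector<T>& in, F op) {
  std::vector<T> out(in.size());
  for (std::size_t i = 0; i < in.size(); ++i)
    out[i] = (i == 0) ? in[0] : op(out[i - 1], in[i]);
  return out;
}

// Exclusive scan: out[0] = init, out[i] = init op in[0] op ... op in[i-1].
template <typename T, typename F>
std::vector<T> exclusive_scan_seq(const std::vector<T>& in, F op, T init) {
  std::vector<T> out(in.size());
  T acc = init;
  for (std::size_t i = 0; i < in.size(); ++i) {
    out[i] = acc;        // value before element i is folded in
    acc = op(acc, in[i]);
  }
  return out;
}
```

Since C++17, `std::inclusive_scan` and `std::exclusive_scan` in `<numeric>` provide the same semantics in the standard library.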

MapOverlap

MapOverlap represents a stencil operation. It is similar to Map, but instead of a single element, a region of elements is available in the user function. The stencil region is accessible in the user function via one of the generic proxy types Region1D<T>...Region4D<T>, matching the dimensionality of the container.

A MapOverlap can either be one-dimensional, working on vectors or matrices (separable computations only) or two-dimensional for matrices. The type is set per-instance and deduced from the user function. Different edge handling modes are available, such as overlapping or clamping.

```cpp
#include <skepu>

// 1D stencil user function: weighted sum over the neighborhood r,
// normalized by scale. r.oi is the overlap radius (here 2).
float conv(skepu::Region1D<float> r, const skepu::Vec<float> stencil, float scale)
{
  float res = 0;
  for (int i = -r.oi; i <= r.oi; ++i)
    res += r(i) * stencil(i + r.oi);
  return res / scale;
}

skepu::Vector<float> convolution(skepu::Vector<float> &v)
{
  auto convol = skepu::MapOverlap(conv);
  skepu::Vector<float> stencil {1, 2, 4, 2, 1};  // weights sum to 10
  skepu::Vector<float> result(v.size());
  convol.setOverlap(2);
  return convol(result, v, stencil, 10);
}
```
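To make edge handling concrete, the sketch below computes the same normalized 1D convolution sequentially and resolves out-of-range neighbors with one of three modes. The `Edge` enum and its mode names are hypothetical and chosen only for this example; the edge modes SkePU itself provides are not reproduced here.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical edge-handling modes for neighbors outside the container.
enum class Edge { Clamp, Cyclic, Pad };

// out[i] = (sum over k in [-o, o] of in[i + k] * w[k + o]) / scale,
// with o = w.size() / 2 (w.size() is assumed to be odd).
std::vector<float> stencil1d_seq(const std::vector<float>& in,
                                 const std::vector<float>& w, float scale,
                                 Edge mode, float pad = 0.0f) {
  const long n = static_cast<long>(in.size());
  const long o = static_cast<long>(w.size() / 2);  // overlap radius
  // Value of neighbor j, resolving out-of-range indices by the edge mode.
  auto at = [&](long j) -> float {
    if (j >= 0 && j < n) return in[static_cast<std::size_t>(j)];
    if (mode == Edge::Clamp) return in[j < 0 ? 0 : static_cast<std::size_t>(n - 1)];
    if (mode == Edge::Cyclic) return in[static_cast<std::size_t>(((j % n) + n) % n)];
    return pad;  // Edge::Pad: constant value outside the container
  };
  std::vector<float> out(in.size());
  for (long i = 0; i < n; ++i) {
    float res = 0.0f;
    for (long k = -o; k <= o; ++k) res += at(i + k) * w[static_cast<std::size_t>(k + o)];
    out[static_cast<std::size_t>(i)] = res / scale;
  }
  return out;
}
```

Interior elements are unaffected by the mode; only the first and last `o` outputs differ between clamping, wrap-around, and padding.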

MapPool

MapPool represents a generic block-wise reduction operation applied on 1D, 2D, 3D, and 4D containers. It is similar to MapOverlap in that a block-shaped region of elements is available in the user function; however, each block is reduced to a single value, so the result container has smaller extents (but the same dimensionality). The pool (the block-shaped region being reduced) is accessible in the user function via one of the generic proxy types Pool1D<T>...Pool4D<T>.
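The block-wise reduction can be sketched sequentially in C++ for the 2D case on a row-major matrix. This sketch assumes non-overlapping blocks whose size divides the matrix extents evenly; `pool2d_seq` is a hypothetical name, not part of the SkePU API.

```cpp
#include <cstddef>
#include <vector>

// Reduce each non-overlapping ph x pw block of a rows x cols matrix to one
// value with f. The result is a (rows/ph) x (cols/pw) matrix: smaller
// extents, same dimensionality. Assumes rows % ph == 0 and cols % pw == 0.
template <typename T, typename F>
std::vector<T> pool2d_seq(const std::vector<T>& m, std::size_t rows,
                          std::size_t cols, std::size_t ph, std::size_t pw, F f) {
  const std::size_t orows = rows / ph, ocols = cols / pw;
  std::vector<T> out(orows * ocols);
  for (std::size_t oi = 0; oi < orows; ++oi)
    for (std::size_t oj = 0; oj < ocols; ++oj) {
      T acc = m[(oi * ph) * cols + oj * pw];  // top-left element of the block
      for (std::size_t i = 0; i < ph; ++i)
        for (std::size_t j = 0; j < pw; ++j)
          if (i || j) acc = f(acc, m[(oi * ph + i) * cols + oj * pw + j]);
      out[oi * ocols + oj] = acc;
    }
  return out;
}
```

With a max operator and 2 x 2 blocks this is the usual max-pooling step of a convolutional network.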

MapPairs

A skeleton computing the matrix that results from invoking an operator on all Cartesian pairs of elements from two (sets of) input vectors.

```cpp
#include <skepu>

int mul(int a, int b)
{
  return a * b;
}

void cartesian(size_t Vsize, size_t Hsize)
{
  auto pairs = skepu::MapPairs(mul);
  skepu::Vector<int> v1(Vsize, 3), h1(Hsize, 7);
  skepu::Matrix<int> res(Vsize, Hsize);
  pairs(res, v1, h1);  // res(i, j) = mul(v1(i), h1(j))
}
```
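A sequential equivalent of the MapPairs call in standard C++ is sketched below, with a `Vsize x Hsize` result stored row-major in a `std::vector`; `map_pairs_seq` is a hypothetical name.

```cpp
#include <cstddef>
#include <vector>

// res(i, j) = f(v[i], h[j]) for every Cartesian pair; the result matrix
// has v.size() rows and h.size() columns, stored row-major.
template <typename R, typename T, typename U, typename F>
std::vector<R> map_pairs_seq(const std::vector<T>& v, const std::vector<U>& h, F f) {
  std::vector<R> res(v.size() * h.size());
  for (std::size_t i = 0; i < v.size(); ++i)
    for (std::size_t j = 0; j < h.size(); ++j)
      res[i * h.size() + j] = f(v[i], h[j]);
  return res;
}
```

Each of the `v.size() * h.size()` calls is independent, so the skeleton can parallelize over both dimensions.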

MapPairsReduce

An efficient chaining of MapPairs and Reduce operations, with the reduction operating along the rows or columns of the intermediate matrix.

```cpp
#include <skepu>

int mul(int a, int b)
{
  return a * b;
}

int sum(int a, int b)
{
  return a + b;
}

void mappairsreduce(size_t Vsize, size_t Hsize)
{
  auto mpr = skepu::MapPairsReduce(mul, sum);
  skepu::Vector<int> v1(Vsize), h1(Hsize);
  skepu::Vector<int> res(Hsize);  // column-wise: one result per column
  mpr.setReduceMode(skepu::ReduceMode::ColWise);
  mpr(res, v1, h1);
}
```
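With `ReduceMode::ColWise`, each column of the pair matrix is reduced to one value, which is why `res` has `Hsize` elements. The sequential sketch below computes that result directly, without materializing the intermediate matrix; the function name is hypothetical, and a row-wise variant would swap the roles of the two loops.

```cpp
#include <cstddef>
#include <vector>

// res[j] = red(f(v[0], h[j]), f(v[1], h[j]), ...): column j of the
// (never stored) v.size() x h.size() pair matrix reduced to one value.
template <typename T, typename F, typename G>
std::vector<T> map_pairs_reduce_colwise_seq(const std::vector<T>& v,
                                            const std::vector<T>& h, F f, G red) {
  std::vector<T> res(h.size());
  for (std::size_t j = 0; j < h.size(); ++j) {
    T acc = f(v.at(0), h[j]);  // v must be non-empty
    for (std::size_t i = 1; i < v.size(); ++i) acc = red(acc, f(v[i], h[j]));
    res[j] = acc;
  }
  return res;
}
```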

SkePU Standard Library

SkePU provides a set of utility modules for commonly used functionality. These span from utilities like SkePU-aware I/O routines and time measurement via portable deterministic parallel pseudorandom number generation to basic linear algebra and deep learning functionality. They interface with SkePU data-containers and may internally use SkePU skeletons to portably parallelize their computations. Each SkePU standard library module (skepu-headers/src/skepu-lib/*) comes with its own namespace:

- Deterministic Portable Pseudorandom Number Generation (using the skepu::Random<> proxy type for accessing pseudorandom number streams in user functions)

- Complex Numbers (skepu::complex)

- Linear Algebra (skepu::blas)

- Image Filtering and Parsing/Serialization (skepu::filter)

- Time Measurement Utilities (skepu::benchmark)

- Consistent Data-Container Input/Output (skepu::io)

- Convolutional and Deep Neural Network Learning and Inference (skepu::dnn)

- Commonly used simple user functions such as add, max, ... (skepu::util)
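The pseudorandom number module is described above as deterministic and portable. One common way to obtain that property, shown here as a swapped-in technique and not SkePU's actual generator, is a counter-based design in which the value for element i is a pure function of a seed and i. The sketch uses the SplitMix64 finalizer as its mixing function; `mix64`, `random_at`, and `fill_chunk` are hypothetical names, and no claims about statistical quality are made.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// SplitMix64 finalizer: a bijective mixing function on 64-bit values.
std::uint64_t mix64(std::uint64_t z) {
  z += 0x9E3779B97F4A7C15ULL;
  z = (z ^ (z >> 30)) * 0xBF58476D1CE4E5B9ULL;
  z = (z ^ (z >> 27)) * 0x94D049BB133111EBULL;
  return z ^ (z >> 31);
}

// Value for global element index `index`: depends only on (seed, index).
std::uint64_t random_at(std::uint64_t seed, std::uint64_t index) {
  return mix64(seed ^ mix64(index));
}

// Fill out[begin, end), e.g. the chunk handled by one worker thread.
void fill_chunk(std::vector<std::uint64_t>& out, std::size_t begin,
                std::size_t end, std::uint64_t seed) {
  for (std::size_t i = begin; i < end; ++i) out[i] = random_at(seed, i);
}
```

Because no state is carried from one element to the next, filling a vector in one pass or in separately processed chunks, in any order, gives identical values.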

Applications

Several test applications have been developed using the SkePU skeletons, including the following:

- Taylor approximation

- Runge-Kutta ODE solver

- Separable and non-separable 2D image convolution filters

- Successive Over-Relaxation (SOR)

- Coulombic potential grid application

- N-body simulation

- LU decomposition

- Mandelbrot fractals

- Smoothed Particle Hydrodynamics (SPH, fluid dynamics shocktube simulation)

- Pearson Product-Moment Correlation Coefficient (PPMCC)

- Mean Squared Error (MSE)

- Median filtering

- Streamcluster (from PARSEC)

- Black-Scholes financial dynamics (from PARSEC)

- Conjugate gradient solver

- Miller-Rabin primality test

- Monte-Carlo π calculation

- Lattice quantum chromodynamics simulation

- Jacobi heat diffusion

- Molecular dynamics for electrochemistry simulation

- Stereo depth estimation (for SkePU-Streaming)

- Motion detection (for SkePU-Streaming)

The source code for some of these is included in the SkePU distribution.

Publications


2010

- Johan Enmyren and Christoph W. Kessler.

SkePU: A multi-backend skeleton programming library for multi-GPU systems.

In Proc. 4th Int. Workshop on High-Level Parallel Programming and Applications (HLPP-2010), Baltimore, Maryland, USA. ACM, September 2010. DOI: 10.1145/1863482.1863487.

- Johan Enmyren, Usman Dastgeer and Christoph Kessler.

Towards a Tunable Multi-Backend Skeleton Programming Framework for Multi-GPU Systems.

Proc. MCC-2010 Third Swedish Workshop on Multicore Computing, Gothenburg, Sweden, Nov. 2010.

2011

- Usman Dastgeer, Johan Enmyren, and Christoph Kessler.

Auto-tuning SkePU: A Multi-Backend Skeleton Programming Framework for Multi-GPU Systems.

Proc. IWMSE-2011, Hawaii, USA, May 2011, ACM.

A previous version of this article was also presented at: Proc. Fourth Workshop on Programmability Issues for Multi-Core Computers (MULTIPROG-2011), January 23, 2011, in conjunction with HiPEAC-2011 conference, Heraklion, Greece.

- Usman Dastgeer and Christoph Kessler.

Flexible Runtime Support for Efficient Skeleton Programming on Heterogeneous GPU-based Systems

Proc. ParCo 2011: International Conference on Parallel Computing, Ghent, Belgium, 2011.

In: Advances in Parallel Computing, Volume 22: Applications, Tools and Techniques on the Road to Exascale Computing, IOS Press, pp. 159-166, 2012. DOI: 10.3233/978-1-61499-041-3-159.

- Usman Dastgeer.

Skeleton Programming for Heterogeneous GPU-based Systems.

Licentiate thesis. Thesis No. 1504, Department of Computer and Information Science, Linköping University, October 2011.

2012

- Christoph Kessler, Usman Dastgeer, Samuel Thibault, Raymond Namyst, Andrew Richards, Uwe Dolinsky, Siegfried Benkner, Jesper Larsson Träff and Sabri Pllana.

Programmability and Performance Portability Aspects of Heterogeneous Multi-/Manycore Systems.

Proc. DATE-2012 conference on Design, Automation and Test in Europe, Dresden, March 2012.

- Usman Dastgeer and Christoph Kessler.

A performance-portable generic component for 2D convolution computations on GPU-based systems.

Proc. MULTIPROG-2012 Workshop at HiPEAC-2012, Paris, Jan. 2012.

2013

- Usman Dastgeer, Lu Li, Christoph Kessler.

Adaptive implementation selection in the SkePU skeleton programming library.

Proc. 2013 Biennial Conference on Advanced Parallel Processing Technology (APPT-2013), Stockholm, Sweden, Aug. 2013. DOI: 10.1007/978-3-642-45293-2_13.

- Mudassar Majeed, Usman Dastgeer, Christoph Kessler.

Cluster-SkePU: A Multi-Backend Skeleton Programming Library for GPU Clusters.

Proc. Int. Conf. on Parallel and Distr. Processing Techniques and Applications (PDPTA-2013), Las Vegas, USA, July 2013.

2014

- Usman Dastgeer.

Performance-Aware Component Composition for GPU-based Systems.

PhD thesis, Linköping Studies in Science and Technology, Dissertation No. 1581, Linköping University, May 2014.

- Christoph Kessler, Usman Dastgeer and Lu Li.

Optimized Composition: Generating Efficient Code for Heterogeneous Systems from Multi-Variant Components, Skeletons and Containers.

In: F. Hannig and J. Teich (eds.), Proc. First Workshop on Resource awareness and adaptivity in multi-core computing (Racing 2014), May 2014, Paderborn, Germany, pp. 43-48.

- Usman Dastgeer and Christoph Kessler.

Smart Containers and Skeleton Programming for GPU-based Systems.

Proc. of the 7th Int. Symposium on High-level Parallel Programming and Applications (HLPP'14), Amsterdam, July 2014.

2015

- Usman Dastgeer and Christoph Kessler.

Smart Containers and Skeleton Programming for GPU-based Systems.

Int. Journal of Parallel Programming 44(3):506-530, June 2016 (online: March 2015), Springer. DOI: 10.1007/s10766-015-0357-6.

2016

- Oskar Sjöström, Soon-Heum Ko, Usman Dastgeer, Lu Li, Christoph Kessler:

Portable Parallelization of the EDGE CFD Application for GPU-based Systems using the SkePU Skeleton Programming Library.

Proc. ParCo-2015 conference, Edinburgh, UK, 1-4 Sep. 2015.

Published in: Gerhard R. Joubert, Hugh Leather, Mark Parsons, Frans Peters, Mark Sawyer (eds.): Advances in Parallel Computing, Volume 27: Parallel Computing: On the Road to Exascale, IOS Press, April 2016, pages 135-144. DOI: 10.3233/978-1-61499-621-7-135.

- Sebastian Thorarensen, Rosandra Cuello, Christoph Kessler, Lu Li and Brendan Barry:

Efficient Execution of SkePU Skeleton Programs on the Low-power Multicore Processor Myriad2.

Proc. 24th Euromicro International Conference on Parallel, Distributed, and Network-Based Processing (PDP 2016), pages 398-402, IEEE, Feb. 2016. DOI: 10.1109/PDP.2016.123.

2017

- Christoph W. Kessler, Sergei Gorlatch, Johan Enmyren, Usman Dastgeer, Michel Steuwer, Philipp Kegel.

Skeleton Programming for Portable Many-Core Computing. Book Chapter (Chapter 6) in: S. Pllana and F. Xhafa, eds., Programming Multi-Core and Many-Core Computing Systems, Wiley Interscience, New York, March 2017, pages 121-142. (accepted 2011; this is about SkePU 1)

2018

- August Ernstsson, Lu Li, Christoph Kessler:

SkePU 2: Flexible and type-safe skeleton programming for heterogeneous parallel systems.

Int. Journal of Parallel Programming 46(1):62-80, Feb. 2018 (online: Jan. 2017). doi:10.1007/s10766-017-0490-5

2019

- August Ernstsson, Christoph Kessler:

Extending smart containers for data locality-aware skeleton programming.

Concurrency and Computation: Practice and Experience 31(5), March 2019, Wiley. (Online: Oct. 2018) DOI: 10.1002/cpe.5003.

- Tomas Öhberg, August Ernstsson, Christoph Kessler:

Hybrid CPU-GPU execution support in the skeleton programming framework SkePU.

The Journal of Supercomputing, Springer. March 2019 (online). DOI: 10.1007/s11227-019-02824-7 (Open Access)

2020

- August Ernstsson, Christoph Kessler:

Multi-variant User Functions for Platform-aware Skeleton Programming.

Proc. of ParCo-2019 conference, Prague, Sep. 2019, in: I. Foster et al. (Eds.), Parallel Computing: Technology Trends, series: Advances in Parallel Computing, vol. 36, IOS press, March 2020, pages 475-484. DOI: 10.3233/APC200074 (open access).

- Sotirios Panagiotou, August Ernstsson, Johan Ahlqvist, Lazaros Papadopoulos, Christoph Kessler, Dimitrios Soudris:

Portable exploitation of parallel and heterogeneous HPC architectures in neural simulation using SkePU.

SCOPES '20: Proceedings of the 23th International Workshop on Software and Compilers for Embedded Systems, New York, NY, USA. ACM, May 2020, pages 74-77. DOI: 10.1145/3378678.3391889.

- August Ernstsson: Designing a Modern Skeleton Programming Framework for Parallel and Heterogeneous Systems. Linköping Studies in Science and Technology, Licentiate Thesis No. 1886, Linköping University, October 2020.

2021

- August Ernstsson, Johan Ahlqvist, Stavroula Zouzoula, Christoph Kessler:

SkePU 3: Portable High-Level Programming of Heterogeneous Systems and HPC Clusters.

Int. Journal of Parallel Programming 49, 846–866 (2021).

2022

- August Ernstsson: Pattern-based Programming Abstractions for Heterogeneous Parallel Computing, Linköping Studies in Science and Technology. Dissertations No. 2205, Linköping University, February 2022.

- Lazaros Papadopoulos, Dimitrios Soudris, Christoph Kessler, August Ernstsson, Johan Ahlqvist, Nikos Vasilas, Athanasios I. Papadopoulos, Panos Seferlis, Charles Prouveur, Matthieu Haefele, Samuel Thibault, Athanasios Salamanis, Theodoros Ioakimidis, Dionysios Kehagias

EXA2PRO: A Framework for High Development Productivity on Heterogeneous Computing Systems.

IEEE Transactions on Parallel and Distributed Systems vol. 33, no. 4, pp. 792-804, 1 April 2022.

- August Ernstsson, Nicolas Vandenbergen, Jörg Keller, Christoph Kessler. A Deterministic Portable Parallel Pseudo-Random Number Generator for Pattern-Based Programming of Heterogeneous Parallel Systems. Int. Journal of Parallel Programming, 2022.

2023

- August Ernstsson, Dalvan Griebler, Christoph Kessler. Assessing Application Efficiency and Performance Portability in Single-Source Programming for Heterogeneous Parallel Systems. International Journal of Parallel Programming 51(1), Jan. 2023 (online: Dec. 2022). Springer, Open Access. DOI: 10.1007/s10766-022-00746-1.

2024

- Björn Birath, August Ernstsson, John Tinnerholm, Christoph Kessler: High-Level Programming of FPGA-Accelerated Systems with Parallel Patterns. International Journal of Parallel Programming, May 2024. Springer, Open Access. DOI: 10.1007/s10766-024-00770-3

2025

- Sehrish Qummar, August Ernstsson, Christoph Kessler, and Oleg Sysoev: SkePU-DNN: Algorithmic Skeleton Programming for Deep Learning on Heterogeneous Systems. Proc. 2025 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Milano, Italy, June 2025, pp. 423-432. DOI: 10.1109/IPDPSW66978.2025.00068.

- August Ernstsson, Elin Frankell, Christoph Kessler: Interactive Performance Visualization and Analysis of Execution Traces for Pattern-based Parallel Programming. International Journal of Parallel Programming 53(5):29, Nov. 2025. Springer, Open Access. DOI: 10.1007/s10766-025-00805-3

Ongoing work

SkePU is a work in progress. Future work includes adding support for more skeletons and containers, e.g. for sparse matrix operations; for further task-parallel skeletons; and for other types of target architectures.

If you would like to contribute, please let us know.

Related projects

- MeterPU: Generic portable measurement framework for C++ for multicore CPU and multi-GPU systems

Contact

For reporting feature suggestions, bug reports, and other comments: please email to "<firstname> DOT <lastname> AT liu DOT se".

Acknowledgments

This work was partly funded by EU H2020 project EXA2PRO, by the EU FP7 projects PEPPHER and EXCESS, by SeRC project OpCoReS, by CUGS, by ELLIIT, by SSF, and by Linköping University, Sweden.

Previous major contributors to SkePU include Johan Enmyren and Usman Dastgeer. The multivector container for MapArray (obsolete since SkePU 2) and the SkePU 1.2 user guide were added by Oskar Sjöström.

Streaming support for CUDA in SkePU 1.2 was contributed by Claudio Parisi from the University of Pisa, Italy, in cooperation with the FastFlow project.

SkePU example programs in the public distribution have been contributed by Johan Enmyren, Usman Dastgeer, Mudassar Majeed and Lukas Gillsjö.

Experimental implementations of SkePU for other target platforms (not part of the public distribution) have been contributed e.g. by Mudassar Majeed, Rosandra Cuello and Sebastian Thorarensen.

The cluster backend of SkePU 3 and later was contributed by Henrik Henriksson and Johan Ahlqvist. Johan also contributed to several other aspects of SkePU development, including the CMake build system.

Special thanks to the project partners of EXA2PRO who helped shape the design and feature set of SkePU 3.

We also thank the National Supercomputing Centre (NSC) and SNIC/NAISS for access to their HPC computing resources (SNIC 2016/5-6, SNIC 2020/13-113, LiU-gpu-2021-1, NAISS 2025/22-60, NAISS 2026/4-64, LiU-gpu-2023-05).